Adversarial attacks and model uncertainty

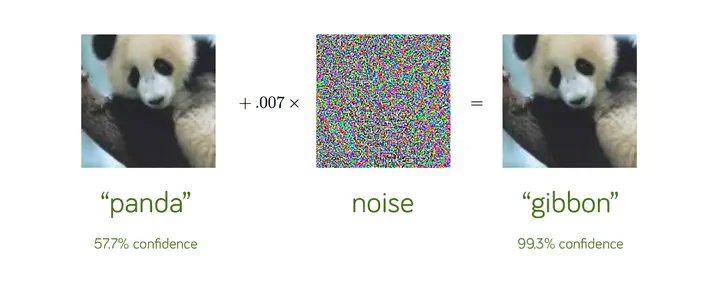

In this project, we wanted to understand how to build uncertainty-aware neural networks. For a classifier that labels an image as a cat or a dog, think of this as reporting a confidence interval alongside the probability estimate. We started out in a theoretical setting, looking at how dropout can be used as a Bayesian approximation (source). The application we had in mind was adversarial attacks: with uncertainty estimates, we can defend against a malicious agent trying to trick the model. We studied the different measures of uncertainty in use and their pros and cons for this application. Finally, we analysed recent work on using Evidence Theory (source) to obtain these uncertainty estimates. It turns out that this approach holds up much better against adversarial attacks. Check the project repo for more details!
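To make the dropout idea concrete, here is a minimal sketch of Monte Carlo dropout on a toy linear classifier, written in plain Python. It is not the code from the project repo: the function names, the toy weights, and the choice of applying dropout to the input features are all assumptions made for illustration. The idea is to keep dropout switched on at prediction time, run several stochastic forward passes, and summarise how much the predictions disagree. Predictive entropy and mutual information are two commonly used uncertainty measures; whether they match the ones compared in the project is an assumption.

```python
import math
import random


def softmax(logits):
    # Numerically stable softmax over a list of floats.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    # Shannon entropy in nats; zero-probability terms contribute nothing.
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def stochastic_forward(x, weights, drop_prob, rng):
    # Hypothetical toy model: one linear layer, dropout on the inputs.
    # Inverted dropout: surviving features are rescaled by 1 / keep.
    keep = 1.0 - drop_prob
    dropped = [xi / keep if rng.random() < keep else 0.0 for xi in x]
    logits = [sum(w * xi for w, xi in zip(row, dropped)) for row in weights]
    return softmax(logits)


def mc_dropout_uncertainty(x, weights, drop_prob=0.5, n_samples=100, seed=0):
    # Run n_samples forward passes with dropout left on.
    rng = random.Random(seed)
    samples = [stochastic_forward(x, weights, drop_prob, rng)
               for _ in range(n_samples)]
    n_classes = len(weights)

    # Average prediction over all stochastic passes.
    mean_probs = [sum(s[c] for s in samples) / n_samples
                  for c in range(n_classes)]

    # Entropy of the averaged prediction (total uncertainty).
    predictive_entropy = entropy(mean_probs)

    # Average entropy of the individual passes.
    expected_entropy = sum(entropy(s) for s in samples) / n_samples

    # The gap measures disagreement between passes (model uncertainty).
    mutual_information = predictive_entropy - expected_entropy
    return mean_probs, predictive_entropy, mutual_information


if __name__ == "__main__":
    # Made-up weights and input for a two-class (cat/dog) toy example.
    toy_weights = [[1.0, -1.0, 0.5], [-0.5, 1.0, 0.2]]
    toy_input = [0.8, 0.3, 1.2]
    probs, pred_ent, mi = mc_dropout_uncertainty(toy_input, toy_weights)
    print("mean probabilities:", probs)
    print("predictive entropy:", pred_ent)
    print("mutual information:", mi)
```

In a defence against adversarial inputs, one plausible use of these numbers is to flag or reject any input whose mutual information exceeds a threshold chosen on clean validation data. That threshold, and the rejection rule itself, are assumptions here rather than something taken from the project.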